Recently, Substack released a feature that lets you experiment with different titles. Substacking about Substack on Substack is admittedly bad form, so I will keep this short.

My main project on Substack is over at CuringCrime, where we publish weekly and where I carried out two experiments. The new feature lets you send the same newsletter under different titles to a subset of your readers and compare how each title performs. You can adjust both the duration and the size of the experiment.

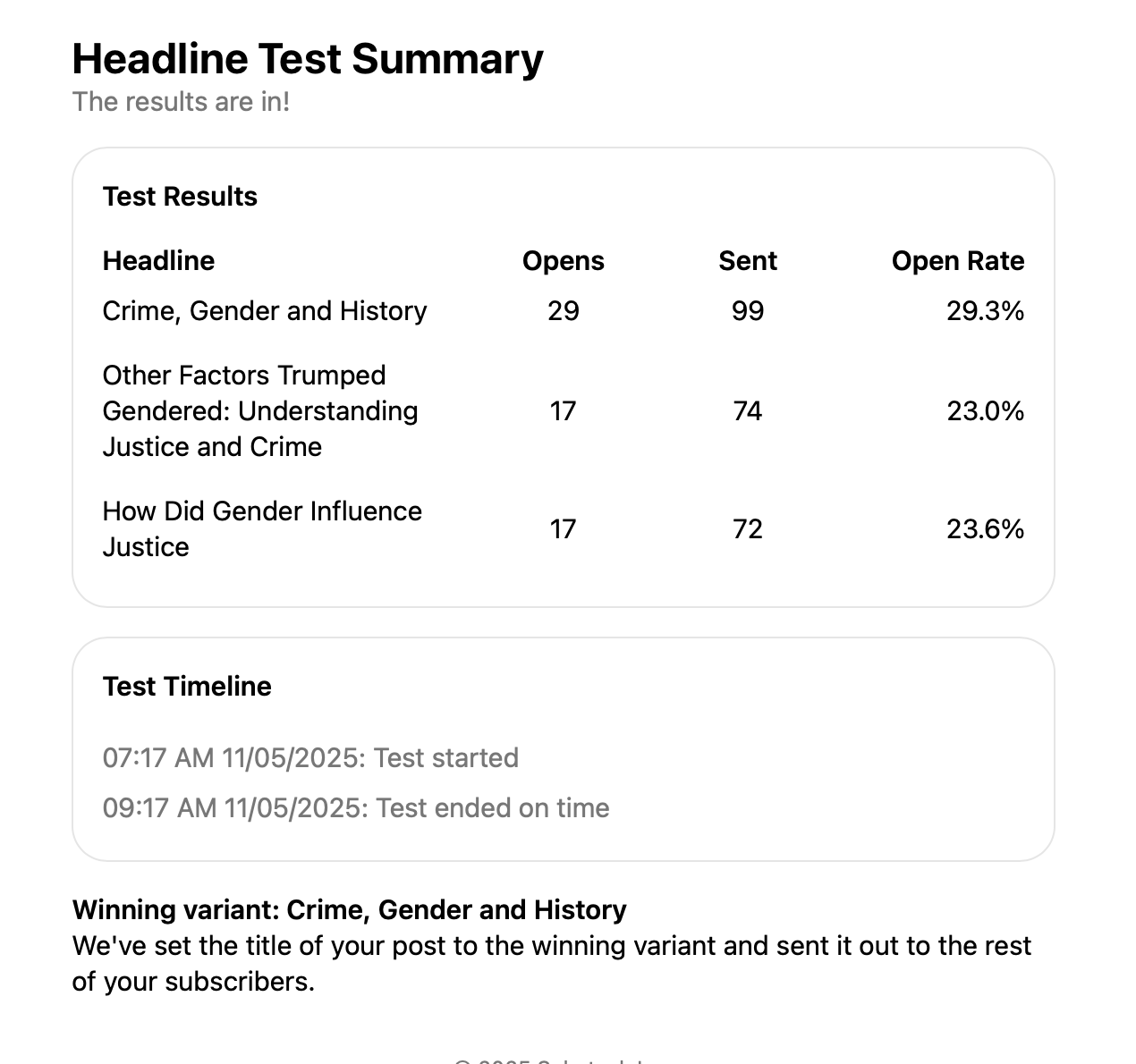

I tested titles on two different articles.

The first summarises research presented at a conference about the role of gender in justice, which reveals a world that is:

“more intricate, logical, and alien. These wonderful case studies indicate that the past is as fascinating and sophisticated as the present and that understanding it demands thorough investigation.”

Maybe all my titles suck equally. Are those decent open rates? Are those numbers even significant?

You can read this post here:

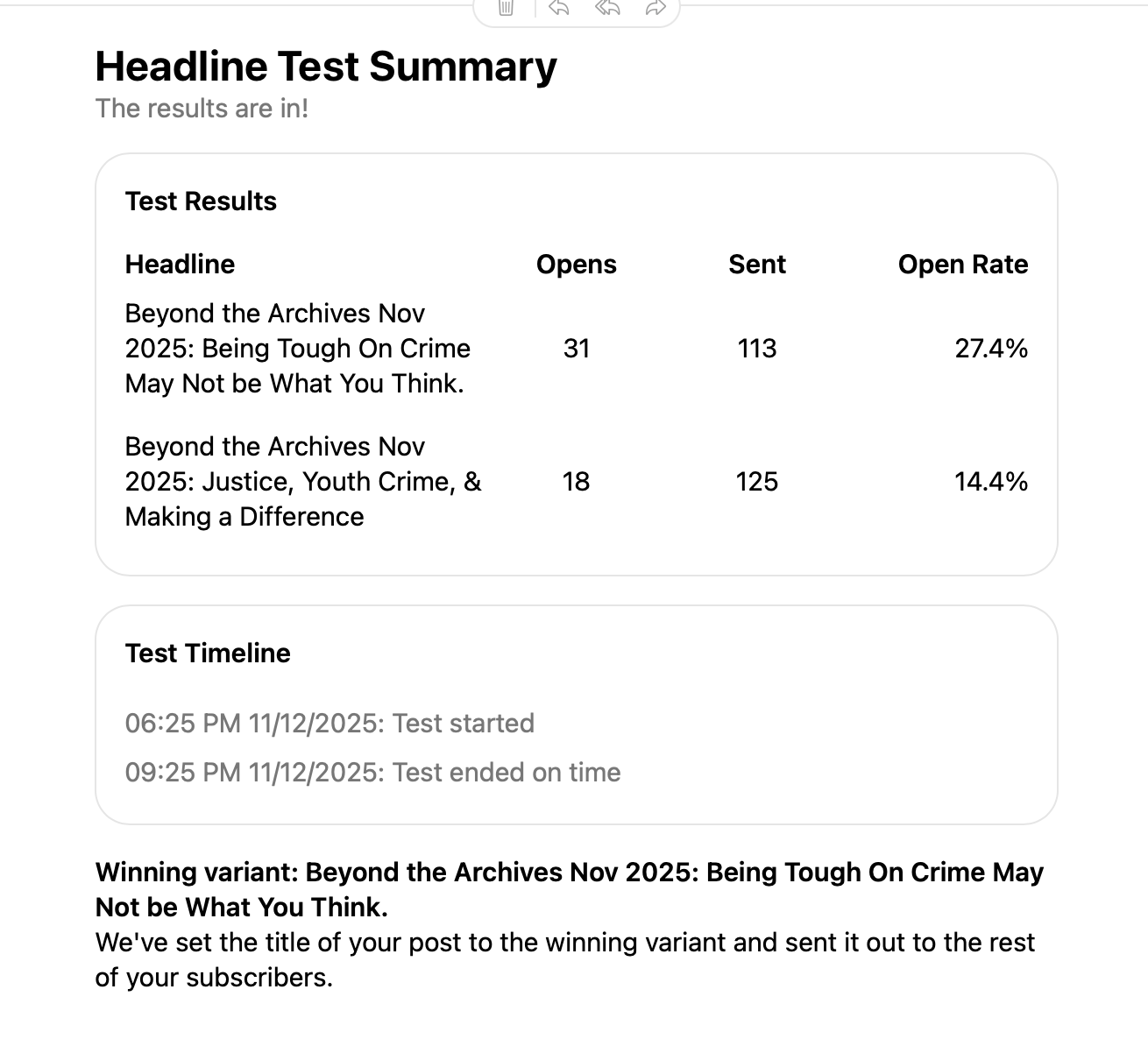

For my second test, I used one of the interviews from our Beyond the Archives series. In it, I wrote:

I am humbled and often impressed by the people we interview. Steven Teske graciously participated in this effort. The result is wonderful, and reaffirms my belief that Substack has lots of value, and that a positive impact can be had.

You can read this wonderful interview below:

I tried the following titles.

I must admit, I liked the first one better than the second one.

Parting Thoughts

I did not find this particularly useful because, I think, the test would need to run at a much larger scale to produce significant results. We have about 500 subscribers at CuringCrime, and while this test did reach a sizeable share of them, I am unsure what I can learn from the results.
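One common way to check whether a gap in open rates between two title variants is more than noise is a two-proportion z-test. The sketch below uses only Python's standard library. It is not how Substack itself evaluates experiments; it assumes recipients are split randomly between the two titles, and the counts in the example are hypothetical placeholders, not the figures from the CuringCrime tests.

```python
import math


def two_proportion_z_test(opens_a, sent_a, opens_b, sent_b):
    """Return (z, two-sided p-value) for H0: both titles share the same open rate.

    Assumes each recipient was randomly assigned to exactly one title.
    """
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    # Pooled open rate, i.e. the best estimate if the titles made no difference.
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    # Standard error of the difference in rates under that null hypothesis.
    se = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    z = (rate_a - rate_b) / se
    # Two-sided p-value from the standard normal distribution: erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical example: 100 recipients per title, 30 opens versus 24 opens.
z, p = two_proportion_z_test(30, 100, 24, 100)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these made-up numbers, a six-point gap (30% versus 24%) gives a p-value of roughly 0.34, far above the conventional 0.05 threshold. Samples of this size would need a much larger gap before the difference could reasonably be called significant.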

Some questions also remain. Does the test take into account where subscribers are? For example, a significant portion of our subscribers are in the United States, and the first test ran at a time when American readers were unlikely to be opening their email. If the system does not account for this, could the results be skewed?

Our open rates are usually 25-34%. Maybe my titles are terrible, and no one wants to read them. Maybe they are too academic.

This kind of test could be useful, but it would be most useful for writers with a large following. For publications with fewer subscribers, it seems gimmicky.

Lastly, the historian in me is somewhat uncomfortable about the same newsletter living in two email boxes with different titles.

Until Next Time, and do